NVIDIA releases cloud-to-robot computing platforms for physical AI, humanoid development

The GR00T-Dreams blueprint generates data to train humanoid robot reasoning and behavior. Source: NVIDIA

At Computex today in Taipei, Taiwan, NVIDIA Corp. announced Isaac GR00T N1.5, the first update to its open, generalized, customizable foundation model for humanoid robot reasoning and skills. The Santa Clara, Calif.-based company also unveiled Isaac GR00T-Dreams, a blueprint for generating synthetic motion data, as well as NVIDIA Blackwell systems to accelerate humanoid development.

“Physical AI and robotics will bring about the next industrial revolution,” stated Jensen Huang, founder and CEO of NVIDIA. “From AI brains for robots to simulated worlds to practice in or AI supercomputers for training foundation models, NVIDIA provides building blocks for every stage of the robotics development journey.”

Humanoid and other robotics developers Agility Robotics, Boston Dynamics, Fourier, Foxlink, Galbot, Mentee Robotics, NEURA Robotics, General Robotics, Skild AI and XPENG Robotics are adopting NVIDIA Isaac platform technologies to advance humanoid robot development and deployment.

“Physical AI is the next wave of AI,” said Rev Lebaredian, vice president of Omniverse and simulation technology at NVIDIA. “Physical AI understands the laws of physics and can generate actions based on sensor inputs. Physical AI will embody three major types of robots: facilities like the factories and warehouses of our Taiwan partners; transportation robots; and [industrial] robots, [including] humanoids, manipulators, and AMRs [autonomous mobile robots].”

NVIDIA Isaac GR00T data-generation blueprint closes data gap

In his Computex keynote, Huang said that Isaac GR00T-Dreams can help generate vast amounts of synthetic motion data. Physical AI developers can use these neural trajectories to teach robots new behaviors, including how to adapt to changing environments.

Developers can first post-train Cosmos Predict world foundation models (WFMs) for their robots. Then, using a single image as the input, GR00T-Dreams generates videos of the robot performing new tasks in new environments.

The blueprint then extracts action tokens — compressed, digestible pieces of data — that are used to teach robots how to perform these new tasks, said NVIDIA. The GR00T-Dreams blueprint complements the Isaac GR00T-Mimic blueprint, which was released at the GTC conference in March.

While GR00T-Mimic uses the NVIDIA Omniverse and Cosmos platforms to augment existing data, GR00T-Dreams uses Cosmos to generate entirely new data.


New models advance humanoid development

NVIDIA Research used the GR00T-Dreams blueprint to generate the synthetic training data for GR00T N1.5, an update to GR00T N1, in just 36 hours. By comparison, NVIDIA said, collecting that data manually would have taken nearly three months.

The company asserted that GR00T N1.5 can better adapt to new environments and workspace configurations, as well as recognize objects through user instructions. It said this update significantly improves the model’s success rate for common material handling and manufacturing tasks like sorting or putting away objects.

GR00T N1.5 can be deployed on the NVIDIA Jetson Thor robot computer, which is launching later this year.

“GR00T N1.5 was trained on synthetic data generated by the new GR00T-Dreams blueprint,” explained Lebaredian. “The biggest challenge in developing robots is the data gap. It’s easy for LLM [large language model] developers to train models because there’s a wealth of data out there. But robots need to learn on real-world data, which is costly and time-consuming to capture.”

“So instead of manually capturing, why don’t we let robots dream data?” he added. “GR00T-Dreams is a synthetic data-generation blueprint built on NVIDIA Cosmos, an open world foundation model coming soon to Hugging Face. First, developers post-train Cosmos Predict with teleoperation data captured for a single robot task, like pick and place, in a single environment.”

“Once post-trained, developers can then use a single image and new prompts to generate dreams, the future of the original image,” Lebaredian continued. “Developers can prompt to pick up different items, like the apple here, or the can here. Then the dreams are evaluated and filtered by Cosmos Reason, a new physical AI reasoning model, and automatically labeled with action and trajectory data.”
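The flow Lebaredian describes (post-train a world model, generate "dreams" from one image and many prompts, filter them with a reasoning model, then label them with actions) can be pictured as a simple data pipeline. The Python below is a minimal sketch under stated assumptions, not NVIDIA's implementation: `generate_dreams`, `filter_dreams`, `label_actions`, and the `toy_*` stand-ins are hypothetical names, post-training is assumed to have already happened, and deriving action labels from consecutive frames with an inverse-dynamics function is an assumption, since the article does not say how the labels are extracted.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Frame = Tuple[str, int]  # toy stand-in for a video frame: (prompt, timestep)


@dataclass
class Dream:
    """One generated clip ("dream") plus the action labels attached later."""
    prompt: str
    frames: List[Frame]
    actions: List[str] = field(default_factory=list)


def generate_dreams(world_model: Callable[[str, str], List[Frame]],
                    image: str, prompts: List[str]) -> List[Dream]:
    # One seed image, many prompts: each prompt yields one candidate clip.
    return [Dream(p, world_model(image, p)) for p in prompts]


def filter_dreams(dreams: List[Dream],
                  critic: Callable[[Dream], bool]) -> List[Dream]:
    # Keep only clips the critic accepts (the article assigns this role to a reasoning model).
    return [d for d in dreams if critic(d)]


def label_actions(dreams: List[Dream],
                  inverse_dynamics: Callable[[Frame, Frame], str]) -> List[Dream]:
    # ASSUMPTION: infer one action label per pair of consecutive frames.
    for d in dreams:
        d.actions = [inverse_dynamics(a, b) for a, b in zip(d.frames, d.frames[1:])]
    return dreams


# --- Hypothetical toy stand-ins, NOT NVIDIA models or APIs ---
def toy_world_model(image: str, prompt: str) -> List[Frame]:
    # Pretend "video" of three timesteps; a real model would render pixels from `image`.
    return [(prompt, t) for t in range(3)]


def toy_critic(dream: Dream) -> bool:
    # Reject a prompt that asks for physically implausible behavior.
    return "levitate" not in dream.prompt


def toy_inverse_dynamics(a: Frame, b: Frame) -> str:
    return f"step {a[1]}->{b[1]}"


prompts = ["pick up the apple", "pick up the can", "levitate the cup"]
dataset = label_actions(
    filter_dreams(generate_dreams(toy_world_model, "workcell.png", prompts), toy_critic),
    toy_inverse_dynamics,
)
for d in dataset:
    print(d.prompt, d.actions)
```

Each stage takes its model as a plain callable, so a real video world model, critic, or action labeler could be swapped in without changing the shape of the pipeline.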

Early adopters of GR00T N models include AeiRobot, Foxlink, Lightwheel and NEURA Robotics. AeiRobot employs the model to enable ALICE4 to understand natural language instructions and execute complex pick-and-place workflows in industrial settings.

Foxlink Group is using it to improve industrial robot manipulator flexibility and efficiency, while Lightwheel is harnessing it to validate synthetic data for faster humanoid robot deployment in factories. NEURA Robotics is evaluating the model to accelerate its development of household automation systems.

Simulation and data generation frameworks speed robot training

Developing highly skilled humanoid robots requires a massive amount of diverse data, which is costly to capture and process, noted NVIDIA. Robots also need to be tested in the physical world, which adds cost and risk.

To help close the data and testing gap, NVIDIA unveiled the following simulation technologies:

NVIDIA Cosmos Reason, a new WFM that uses chain-of-thought reasoning to help curate accurate, higher-quality synthetic data for physical AI model training, is now available on Hugging Face.

Cosmos Predict 2, used in GR00T-Dreams, is coming soon to Hugging Face, featuring performance enhancements for high-quality world generation and reduced hallucination.

NVIDIA Isaac GR00T-Mimic, a blueprint for generating exponentially large quantities of synthetic motion trajectories for robot manipulation, using just a few human demonstrations.

Open-Source Physical AI Dataset, which now includes 24,000 high-quality humanoid robot motion trajectories used to develop GR00T N models.

NVIDIA Isaac Sim 5.0, a simulation and synthetic data generation framework, will soon be openly available on GitHub.

NVIDIA Isaac Lab 2.2, an open-source robot learning framework that will support new evaluation environments to help developers test GR00T N models.

Lebaredian touted how GR00T-Dreams can speed up development: “Developers use these dreams to bulk up training data, improving model performance, and reducing the need to manually capture teleoperation data by a factor of 20. Our research team trained GR00T N1.5 using dreams generated in 36 hours versus what would have taken three months for a human to manually capture.”

Can developers use RTX PRO 6000, synthetic data generation, and simulation to build robots besides humanoids?

“Essentially, if you think about what a humanoid robot is, it’s kind of a superset of many of the other types of robots,” Lebaredian replied to The Robot Report. “It has locomotion. It could move around like an AMR does. It has arms that can pick and place, like a robot manipulator.”

“One of the reasons why we like to focus on humanoids is if you can solve the humanoid problem, all the other problems in robotics kind of fall out naturally from there,” he asserted. “So the very same process we use to generate the synthetic data and then to test them applies to any type of robot. We see a lot of use cases for humanoid robots and a great lack of data.”

Foxconn and Foxlink are using the GR00T-Mimic blueprint for synthetic manipulation motion generation to accelerate their robotics training pipelines. Agility Robotics, Boston Dynamics, Fourier, Mentee Robotics, NEURA Robotics, and XPENG Robotics are simulating and training their humanoids using Isaac Sim and Isaac Lab.

Skild AI is using the simulation frameworks to develop general robot intelligence, and General Robotics is integrating them into its robot intelligence platform.

Foxconn’s collaborative nursing robot is one example of smart hospital applications developed using NVIDIA technologies. Source: Foxconn

NVIDIA Blackwell systems available to robot developers

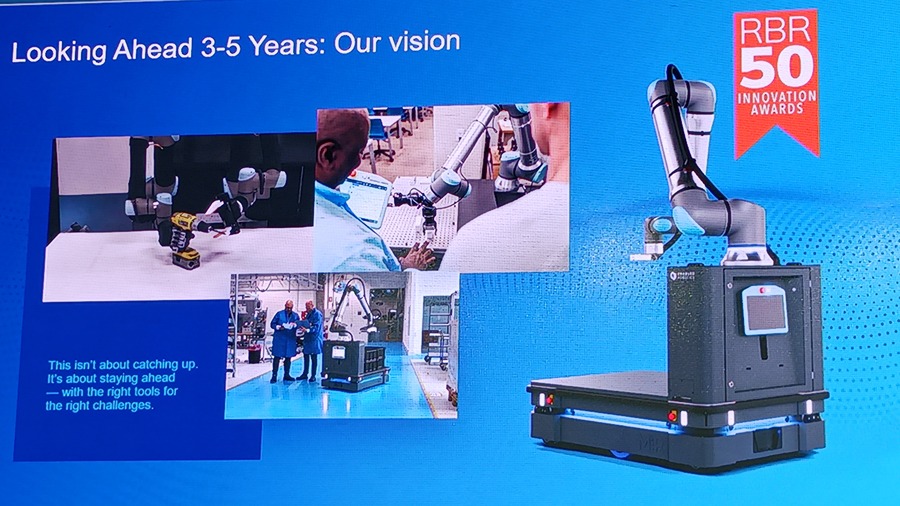

Global systems manufacturers are building NVIDIA RTX PRO 6000 workstations and servers. NVIDIA said it offers a single architecture to easily run robot development workloads across training, synthetic data generation, robot learning, and simulation. This is part of its strategy of creating “AI factories” with partners such as Foxconn.

Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro have announced RTX PRO 6000 Blackwell-powered servers, which will support workloads such as quantum computing research. Meanwhile, Dell Technologies, HP Inc., and Lenovo have announced NVIDIA RTX PRO 6000 Blackwell-powered workstations.

When more compute is required to run large-scale training or data-generation workloads, developers can tap into Blackwell systems like GB200 NVL72, available with NVIDIA DGX Cloud on leading cloud providers and NVIDIA Cloud Partners, to achieve up to 18x greater performance for data processing, said NVIDIA. Developers can then deploy their robot foundation models to NVIDIA Jetson AGX Thor, coming soon, to accelerate on-robot inference and runtime performance.

NVIDIA also announced the following:

RTX PRO Blackwell servers deliver acceleration for AI, design, engineering, and business applications for building IT infrastructure with the new NVIDIA Enterprise AI Factory validated design. Source: NVIDIA
